Inaudible vibrations sent through a tabletop from up to 30 feet away can secretly command Apple's Siri or Google Assistant without the phone's owner knowing, academic researchers revealed last week.

"By exploiting the unique properties of acoustic transmission in solid materials, we design a new attack called SurfingAttack that allows multiple rounds of interaction between the voice-controlled device and the attacker over longer distances," the researchers write on a website dedicated to their findings.

"SurfingAttack enables new attack scenarios, such as hijacking mobile short message service (SMS) passcodes or making ghost fraud calls without the owner being aware."

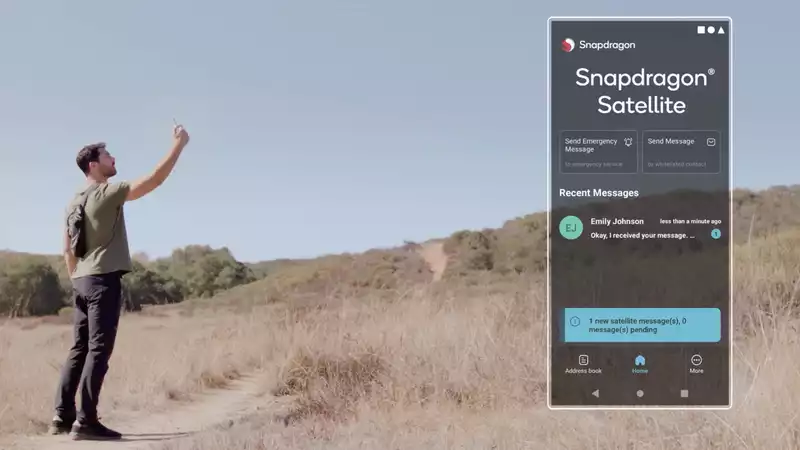

In a fun video, one of many the researchers posted on YouTube, a first-generation Google Pixel takes a selfie and reads out a text message (including a secret two-factor authentication code), all in response to inaudible commands transmitted through a flat speaker attached to the tabletop and connected to an off-screen laptop.

The Pixel is even shown calling an older-model iPhone in response to a hidden command; the Pixel's synthesized voice requests a secret access code from the iPhone owner, and Siri responds with that code.

Theoretically, a naughty office worker could attach a piezoelectric speaker to the underside of a conference table and, during an important company meeting, send silent commands from his laptop to a colleague's phone, as long as the phone is lying on the table and, preferably, unlocked.

This attack, ahem, SurfingAttack, worked on 15 of the 17 phone models tested, including four iPhones (5, 5s, 6, X), the first three Google Pixels, three Xiaomis (Mi 5, Mi 8, Mi 8 Lite), the Samsung Galaxy S7 and S9, and Huawei's Honor View 8.

If you don't have a curved phone (curved handsets appear to resist the attack), the researchers recommend placing your phone on a "soft fabric" or using a "thicker phone case made of less common materials like wood." Alternatively, disable voice assistant access while the phone is locked, and make sure your phone is locked when you're sitting through a long meeting.

There are other caveats. The silent commands sent from the tabletop still have to sound like your voice to work, but a good machine learning algorithm, or a skilled human impersonator, can pull it off.

Siri and Google Assistant respond out loud to the silent commands, so an attack is unlikely to go unnoticed in a quiet room full of people who can hear the replies. However, the silent commands can also tell the voice assistant to turn down its volume, making the responses hard to hear in a noisy room while remaining audible to the attacker's hidden microphone.

In any case, as Michigan State University researcher Qiben Yan told The Register, "Many people leave their phones on the table."

"Furthermore, Google Assistant is not very accurate at matching a specific human voice," Yan added. "We found that the Google Assistant on many smartphones could be activated and controlled by a random person's voice."

Previous research on covertly activating voice assistants has involved sending ultrasonic commands through the air or focusing laser beams on a smart speaker's microphone from hundreds of feet away. This is the first time, however, that an attack through a solid surface has been demonstrated.

Surfaces tested included tables made of metal, glass, and wood. Ordinary phone cases were not very effective at dampening the vibrations.

The study was conducted by Qiben Yan and Hanqing Guo of the SEIT Institute at Michigan State University, Kehai Liu of the Chinese Academy of Sciences, Qin Zhou of the University of Nebraska-Lincoln, and Ning Zhang of Washington University in St. Louis. Their academic paper can be read here.
