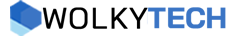

At the recent Search On event, Google announced that it is taking steps to reinvent its search engine. As the world shifts to a more visual-first approach with apps like TikTok and Instagram, Google is finding new ways to let people search and discover visually.

Google Search already offers voice and image-based search, but the tech giant plans to expand this to include more content types and more interactive features. One of the major changes will be the display of images and video content directly in search results.

Google is trying to make its search engine more fluid and interactive. On the company's blog, Cathy Edwards, vice president of search, states, "We're working hard to make it possible to ask questions with fewer words, or to understand exactly what you mean without using any words at all."

For example, the Google app on iOS will have shortcuts under the search bar. These will include options such as "Identify songs by listening," "Solve homework with camera," "Find products with screenshots," and "Translate text with camera."

Other useful search features include a more developed autocomplete, which also suggests keyword and topic options to help you refine what you are looking for.

A major change is the way Google displays some of its results using different types of media. Once you find the right words for your search, Google will highlight "the most relevant and useful information, including content from open web creators."

So, for example, if you search for a particular city, you will see stories and short videos by content creators highlighting tips on how to explore and enjoy that city.

According to Google, information comes from a variety of sources and is not categorized separately as text, images, or videos. The restructured search will be more interactive, allowing users to "discover" more information. According to the company, this feature will be available in the "coming months."

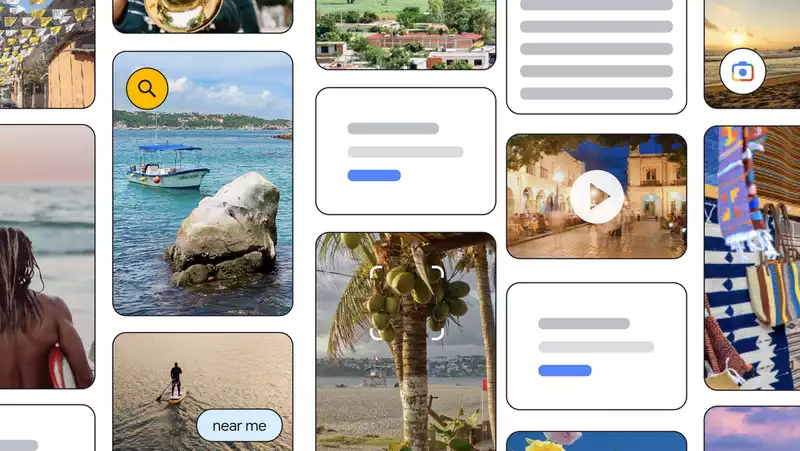

Google is also using advanced machine learning for some of its new translation features. A new Lens translation update lets you point the camera at a poster in another language; Lens then translates the text and overlays it on the image itself.

Google is also making it easier to find specific foods near you. The new "Multisearch near me" feature allows users to take a photo or screenshot of a dish and instantly find places nearby that serve it.

Multisearch launched earlier this year, allowing users to search for specific items from screenshots using Lens. The tool has now been extended to local results, saving you the time of browsing menus to find exactly what you want to eat.

Most of the new features will first be available to US users in the coming months on the iOS Google app before being rolled out more widely.

