AI image generator Midjourney has announced a new feature that allows users to generate and extend additional visual elements around the images they create.

This feature, called Zoom Out, allows users to zoom out from a specific point in an image to see what is around it. This concept is similar to Photoshop's Generative Fill feature, which uses AI to nondestructively extend or remove content in an image.

The biggest difference is that Midjourney's Zoom Out only works on generated images, whereas Adobe's Photoshop can handle any image the user throws at it.

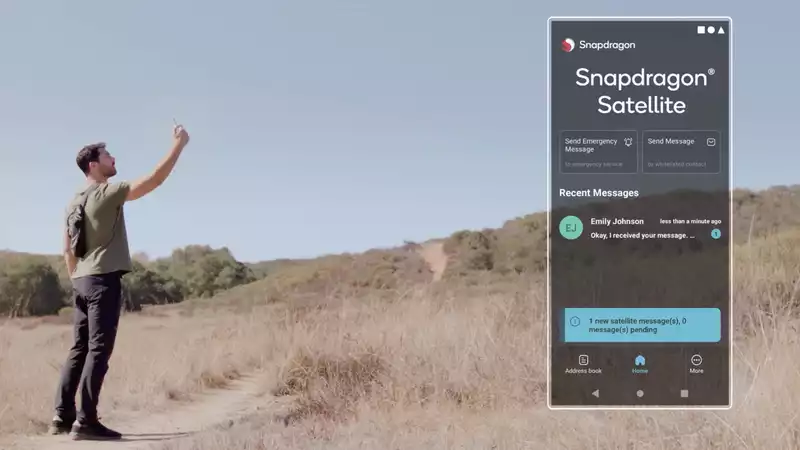

As with other AI image and video generators, you first prompt Midjourney to generate an image. You are then given the option to zoom out by 1.5x or 2x. These options should appear automatically after you upscale the generated image. To use Zoom Out, you need at least a Basic subscription.

Another option is the 'Custom Zoom' button, which accepts a value between 1 and 2 and lets you change the prompt before the image is expanded. Want your generated image to look like a framed picture hanging on a wall? Thanks to Midjourney v5.2, this is now possible.

Additionally, you can use the "Make Square" tool to adjust the aspect ratio of the artwork. If the original image is landscape, Midjourney will extend the scene vertically. If the original image is portrait, the AI will extend it horizontally.
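To make the Make Square rule concrete, here is a minimal sketch in Python. The function name `make_square_plan` and the pixel arithmetic are my own assumptions for illustration. Midjourney does not expose anything like this, and the real tool may resize the result rather than simply pad it.

```python
def make_square_plan(width, height):
    """Return (direction, pixels_to_add) needed to turn an image into a square.

    Hypothetical helper: it only illustrates the rule described above,
    not how Midjourney actually implements Make Square.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if width == height:
        return ("none", 0)                    # already square
    if width > height:
        return ("vertical", width - height)   # landscape: grow top and bottom
    return ("horizontal", height - width)     # portrait: grow left and right


print(make_square_plan(1456, 816))   # a landscape image
print(make_square_plan(816, 1456))   # a portrait image
```

The only point of the sketch is the direction: the short side is the one that gets new content.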

However, according to Midjourney, zooming out does not increase the maximum image size, which stays at 1024px x 1024px.
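A bit of arithmetic shows what a fixed output size means in practice. The sketch below assumes the zoomed-out result is rendered on a canvas of 1024px per side and that the original picture is shrunk uniformly by the zoom factor. Both are my assumptions for illustration, as is the helper name `original_footprint`.

```python
def original_footprint(canvas_px, zoom):
    """Return (side_px, area_fraction) of the original image after zooming out.

    Assumes the canvas size stays fixed and the original is scaled down
    by exactly `zoom`; this is an illustration, not Midjourney's code.
    """
    if not 1.0 <= zoom <= 2.0:
        raise ValueError("zoom must be between 1 and 2")
    side_px = round(canvas_px / zoom)   # width/height left for the original
    area_fraction = 1 / zoom ** 2       # share of the canvas it still covers
    return side_px, area_fraction


for factor in (1.5, 2.0):
    side, share = original_footprint(1024, factor)
    print(f"{factor}x: original is about {side}px wide, {share:.0%} of the canvas")
```

Under these assumptions, a 2x zoom leaves the original scene with a quarter of the canvas, so the rabbit you started with ends up drawn with far fewer pixels than before.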

The online reaction to Zoom Out has been overwhelmingly positive, and I am not surprised.

Twitter user Matt Wolfe believes this new feature is the key to generating multiple scenes featuring the same character: once a character has been generated, it can be placed in a different scene using Zoom Out.

Also, some people are already keen on directing AI movies using only text prompts and inserting the output in a regular video editor.

Naturally, Tom's Guide was keen to try this out for themselves.

So I designed a little stress test for the AI. I wanted a character to take the lead role, and I set up the scene so that zooming out would show what the feature can do.

I started with the prompt: a rabbit sitting in his seat eating popcorn in a movie theater full of animals.

As expected, I got a selection of four different-looking (but all incredibly cute) rabbits. Not wanting to be distracted by the AI, I decided to upscale the image that gave my furry friend the most complex-looking neighbor.

Then, as promised, I was given the option to use the "2x zoom," "1.5x zoom," or "custom zoom" buttons.

My Twitter feed was full of cinematic masterpieces, so I had high hopes. The 1.5x zoom version felt decent enough for a first attempt: I could see how some of the remaining characters looked, while the broad scene and styling stayed the same.

On the other hand, the more I thought about it, the more I felt like I was being shown an uncropped version of the image that should have been given to me in the first place. And if I were being picky, most of the cinema seems to be occupied by what appear to be humans, not animals.

I then decided to try the 2x zoom version, which for some reason seemed to work better for this particular scene.

The last test left for me was a custom zoom of my image. I played the role of a director who had completely changed his mind about directing his scene. I asked for a 2x zoomed version of the previous scene, but I wanted everyone but the rabbit to be a hippopotamus.

The result was almost identical to my original 2x version, albeit with far fewer hippos than I had asked for.

Since this verdict is based on a first attempt, things may have played out differently had I used a different prompt. For now, however, I think Zoom Out works better when the main character is placed in a more predictable and consistent environment, one where the AI can treat the surrounding scene as a pattern.

I tried to create a cast of individual characters next to the rabbit, but the AI had trouble generating multiple mini-scenes. When zooming out, less is more.

I wanted to test the limits of Midjourney.

But if you want to channel your inner Spielberg and create stunning magnified scenes that make your followers stop scrolling, definitely put more thought than I did into what you want to achieve from zooming out.
